At the start of the month, we released our Market Trends Snapshot, an overview of what’s going on in ecommerce right now. As we all know, these are unprecedented times, and the data continues to reflect that vividly.

We hosted a webinar last week that took a deeper dive into the findings. In it, we analyzed the overall sales, web traffic and review engagement and submission trends highlighted in the original snapshot data. We also looked at what has happened to consumer review length over the past few months and shared some tips on how you can encourage longer 5-star reviews.

You can check out the recording of the webinar here. Otherwise, read on for an in-depth overview of all we covered plus the answers to questions posed by webinar participants.

What is the PowerReviews Market Trends Snapshot?

These insights come from analysis of consumer behavior across more than 1.5 million product pages on more than 1,200 brand and retailer sites between February 24 and April 24, 2020, so they can be considered highly representative of current market trends. The snapshot is published on the first of every month at PowerReviews.com/Insights.

Summary recap

Traffic up, while order volumes hit a “new normal”?

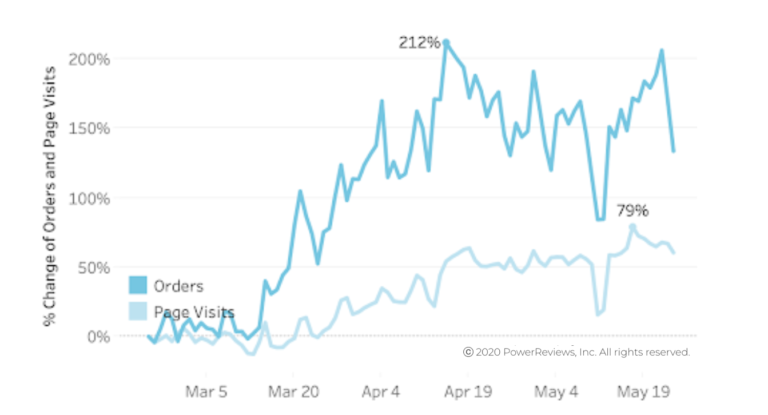

As a reminder, we started this series to measure the impact of the pandemic on consumer behavior. All growth rates are therefore pegged relative to levels recorded at the end of February (pre-pandemic timing).
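As a rough illustration of that indexing, here is a minimal Python sketch. It assumes growth is simple percentage change against a single fixed late-February baseline value; the order counts are hypothetical, since the report doesn’t publish raw volumes.

```python
def pct_change_vs_baseline(value: float, baseline: float) -> float:
    """Percentage change of `value` relative to a fixed pre-pandemic baseline."""
    if baseline == 0:
        raise ValueError("baseline must be non-zero")
    return (value - baseline) * 100.0 / baseline


# Hypothetical order counts, for illustration only.
late_february_orders = 1_000
mid_april_orders = 3_120

change = pct_change_vs_baseline(mid_april_orders, late_february_orders)
print(f"{change:.0f}% above late-February levels")  # 212% above late-February levels
```

Read this way, a 212% increase means order volume was roughly 3.1x its late-February level, not 2.1x.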

After skyrocketing in March and continuing to climb through April, ecommerce order volumes started to level off in May. We haven’t surpassed the 212% increase we saw in mid-April, although we did cross the 200% threshold once in May (around Memorial Day).

For most of the month, ecommerce orders were up between 150% and 200%. Of course, the big question on everyone’s mind is whether this will last as stores reopen. It’s difficult to predict. We know that many regions in the US began to reopen at some point during May, particularly in the back half of the month. Yet that is exactly when we saw the highest increases. This could be explained by the fact that late May is typically a popular time for start-of-summer promotional activity. So it’s definitely one to watch.

As for site traffic, it was slightly higher in May than in previous months but overall also appears to be fairly steady. As we mentioned in previous months’ reports, shoppers were doing a lot more buying than browsing at the beginning of the COVID era. We put this increase in traffic down to a return to more typical shopping habits.

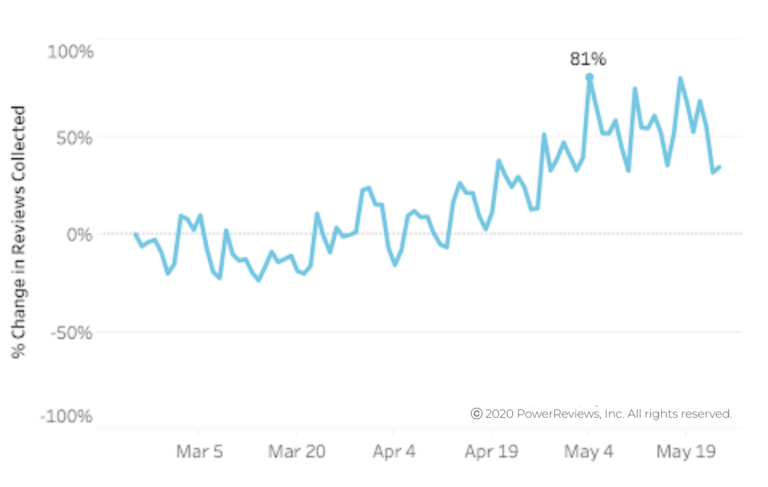

Review submission growth more than doubles in May

Review submission volumes are a lagging indicator (because reviews usually aren’t solicited until a few weeks after an order is placed). So the increases we see above are in line with our expectations.

However, the size of the increase is significant and worthy of note. Review submissions moved from 36% above late-February levels in April to 81% above them in May, meaning the increase over that baseline grew roughly 2.3x (81 ÷ 36 ≈ 2.25) in a single month.

Next month, we may see this stabilize a bit more. Even so, it highlights what a major opportunity the current period represents for brands and retailers to continue soliciting customer content.
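The 2.3x figure compares the size of the two increases (81 ÷ 36), not the underlying submission volumes. Assuming both percentages are measured against the same late-February baseline, this short Python sketch separates the two readings:

```python
APRIL_PCT_ABOVE_BASELINE = 36
MAY_PCT_ABOVE_BASELINE = 81


def as_multiple_of_baseline(pct_above: float) -> float:
    """Convert '% above baseline' into volume as a multiple of the baseline."""
    return 1 + pct_above / 100


# How much larger May's increase is than April's increase.
growth_of_increase = MAY_PCT_ABOVE_BASELINE / APRIL_PCT_ABOVE_BASELINE

# How much larger May's submission volume is than April's volume.
volume_ratio = (as_multiple_of_baseline(MAY_PCT_ABOVE_BASELINE)
                / as_multiple_of_baseline(APRIL_PCT_ABOVE_BASELINE))

print(f"Increase over baseline grew {growth_of_increase:.2f}x")  # Increase over baseline grew 2.25x
print(f"Submission volume grew {volume_ratio:.2f}x month over month")  # Submission volume grew 1.33x month over month
```

Both numbers describe the same data: the first tracks the size of the increase, the second the month-over-month change in volume.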

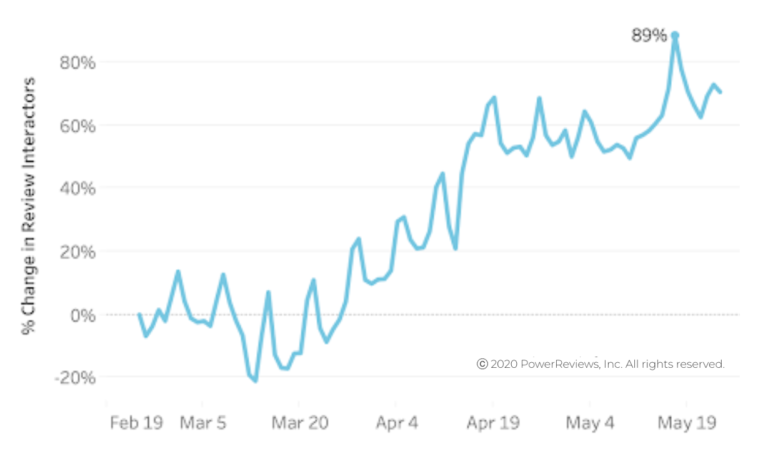

Review engagement increases

The above chart highlights consumer engagement with review content. Not only are customers writing reviews at an increased rate, they are reading them in greater volumes too.

For clarity, we define engagement as any interaction with the review display (clicking a filter, sorting, etc.). As you can see, engagement peaked in late May at 89% above late-February levels, far above what we saw before the pandemic.

It seems that as browsing becomes more prevalent in the shopping journey, review engagement is playing an increasingly important role in purchasing decisions.

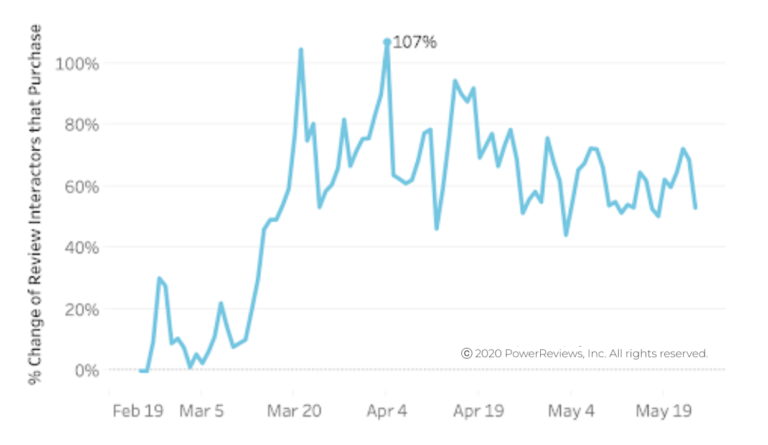

Review content continues to have big impact

To underscore this point, we isolated review engagement among purchasers only. Among this group, you can see that the increase in engagement has been huge. It doubled early in March, in line with increased order volumes. This engagement then remained consistently high, up 60% relative to pre-Covid times throughout May.

Again, this just underscores the importance of offering review content on your site. It provides exceptional validation and social proof to enable shoppers to make the best purchasing decisions possible.

Spotlight: Product Ratings, Customer Sentiment, and Review Length

Given that reviews continue to be a huge factor in the digital customer journey – whether shoppers end up purchasing or are just browsing – we wanted to take a deeper look. So for the rest of this blog, we focus primarily on review length.

How are current market conditions affecting review length? Has it gone up or down as folks have flooded online? Do review ratings correlate with review length, and in what way? And, most importantly, what can you do to create better review content that will convert browsers to buyers?

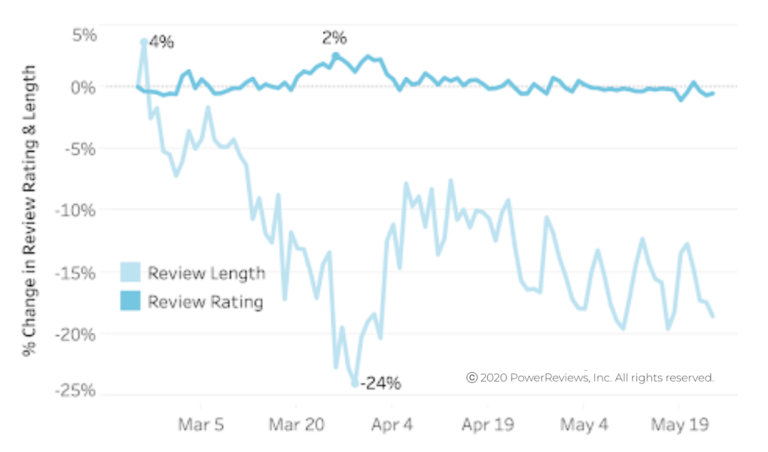

Review length stabilizes, ratings unchanged

With consumers at home with a ton of time on their hands, you might expect them to be investing more time in writing reviews. But that’s not actually proving to be the case.

Since our analysis began three months ago, we’ve found that star ratings have remained stable but review comments are getting shorter. When we first saw that 24% dip in commentary length in March, we said we’d keep an eye on it. In April, we saw a slight rebound. However, in May, this upward trajectory did not continue. So we thought it was time to dig a little deeper and also offer some tips about what you can do to reverse this trend in your business.

Review length in real terms...

This chart is the same as the one above, but it shows review length as a character count. A 20% decrease in character count may seem like a pretty drastic change. As we reported in last month’s webinar, the average review length across all the data ever captured by all PowerReviews clients comes in at 154 characters. The average for the last three months: 133 characters.

Digging a little deeper, you can see the peak of 159 characters at the start of the tracking period. This figure falls to 117 in mid-March, a 42-character drop. It then rebounds to around 140 characters before stabilizing in the 130 range.

These differences are fairly minuscule in real terms: 40 characters typically works out to 4-6 words. This may not seem like a lot, but let’s take a closer look.

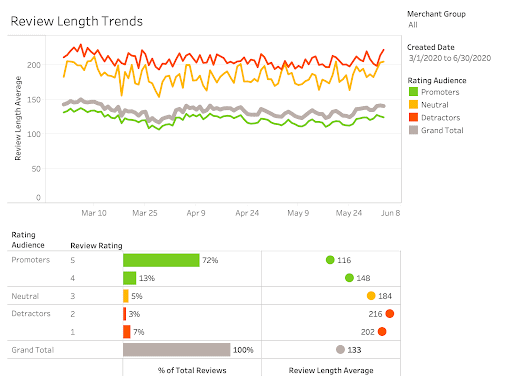

Low ratings correlate to long reviews, and vice versa

What happens when we start cross-referencing rating with character count? Note: we call any 4- or 5-star rating a promoter, a 3-star rating neutral and a 1- or 2-star rating a detractor.

Based on the above, positively rated reviews clearly tend to be shorter than negative reviews. Intuitively, this makes sense. You’ve probably seen a lot of review comments out there that simply say “exactly as described”, “this thing is great” or similar. Negative reviews, on the other hand, tend to include more detailed explanations of why the reviewer didn’t like the product.

Negative reviews fairly consistently average more than 200 characters. Positive reviews, however, track more closely to the overall averages we described across all our data. Why? Most reviews are positive. In fact, the bottom chart here highlights that a whopping 85% of product reviews are rated 4 or 5 stars.
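To make the cross-referencing concrete, here is a minimal Python sketch that maps star ratings to the promoter, neutral and detractor groups defined above and averages comment length per group. It assumes length is simply the number of characters in the comment text; the sample reviews are hypothetical (the two short positive ones echo the examples above).

```python
from collections import defaultdict
from statistics import mean


def sentiment_group(stars: int) -> str:
    """Map a 1-5 star rating to the promoter / neutral / detractor groups."""
    if stars not in range(1, 6):
        raise ValueError(f"rating must be 1-5, got {stars}")
    if stars >= 4:
        return "promoter"
    if stars == 3:
        return "neutral"
    return "detractor"


def avg_length_by_group(reviews):
    """Average comment length in characters per group.

    `reviews` is an iterable of (stars, comment_text) pairs.
    """
    lengths = defaultdict(list)
    for stars, text in reviews:
        lengths[sentiment_group(stars)].append(len(text))
    return {group: mean(values) for group, values in lengths.items()}


sample = [
    (5, "Exactly as described."),
    (4, "This thing is great."),
    (1, "The zipper broke after two days and customer service never replied to my emails."),
]
print(avg_length_by_group(sample))
```

Groups with no reviews are simply absent from the result rather than reported as zero.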

Now let’s look more closely at the context of these character counts and how they’re being impacted.

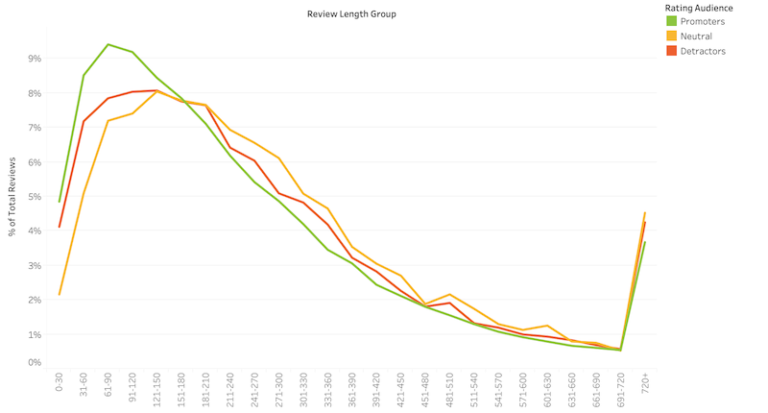

Going a little deeper, here you can see the distribution of review commentary length (in 30-character intervals) by positive, neutral and negative ratings (as defined in the previous section).

You can see that the peak of the positive review curve in green is narrower and shifted more to the left than the other two lines. Nearly 10% of positive reviews are 60-90 characters in length.

But what does this actually mean? Here is a real review from one of our customers that would fall into this bucket: “Love this jacket! Super comfortable and flattering. Fit was perfect too!” That three-sentence review is just 72 characters and probably sounds pretty typical of the review content you analyze day to day.

For a shopper, this may well be helpful. You know that this person thought the product was comfortable and flattering, and that the fit was good. But there isn’t much underlying context (most obviously: what size are they?). If the reviewer had added just a few more words about why the fit was great or what made the jacket comfortable, readers might have a better sense of whether they want it.
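The length distribution described above boils down to bucketing each comment by character count. A minimal Python sketch, assuming half-open 30-character intervals (so a 90-character comment lands in the 90-120 bucket; the report doesn’t say how boundaries are handled):

```python
from collections import Counter

BUCKET_WIDTH = 30  # characters per interval


def length_bucket(text: str, width: int = BUCKET_WIDTH) -> tuple[int, int]:
    """Return the half-open [start, end) character-count interval for a comment."""
    start = (len(text) // width) * width
    return (start, start + width)


def length_distribution(texts, width: int = BUCKET_WIDTH) -> dict:
    """Share of comments (0-1) falling into each interval, sorted by interval."""
    counts = Counter(length_bucket(t, width) for t in texts)
    total = sum(counts.values())
    if total == 0:
        return {}
    return {bucket: n / total for bucket, n in sorted(counts.items())}


jacket = "Love this jacket! Super comfortable and flattering. Fit was perfect too!"
print(len(jacket), length_bucket(jacket))
```

Run on the jacket review quoted above, `length_bucket` returns `(60, 90)`, the bucket that holds nearly 10% of positive reviews. Computing the distribution separately for each rating group gives the three curves in the chart.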

When it comes down to it, the point of reviews is to help shoppers make the best purchasing decision possible. There needs to be meaningful information in that commentary in order to help your shoppers do that. Does review length automatically correspond to helpfulness? Let’s see what the data says.

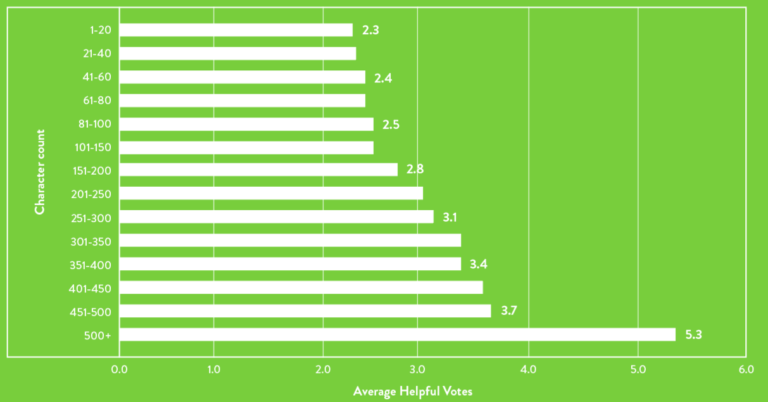

Impact of Review Length

Here we look at the distribution of review comment length. However, instead of sorting by rating, we examine the number of helpful votes each review received from other shoppers.

Reviews that were 80-100 characters long received 2.5 helpful votes on average. This number gradually increases with length, and reviews of 500+ characters receive more than double that (5.3 helpful votes on average). That’s a huge difference.
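The helpful-votes comparison is a group-by over length bands. This Python sketch assumes each review is a (comment text, helpful vote count) pair and uses hypothetical 100-character bands with an open-ended 500+ band; the report’s exact band edges aren’t stated beyond the 80-100 and 500+ examples. Averages like these show correlation, not causation.

```python
import bisect
from collections import defaultdict
from statistics import mean

# Hypothetical band edges in characters; the last band is open-ended ("500+").
EDGES = [0, 100, 200, 300, 400, 500]


def band_label(n_chars: int, edges=EDGES) -> str:
    """Label the length band a character count falls into."""
    i = bisect.bisect_right(edges, n_chars) - 1
    if i == len(edges) - 1:
        return f"{edges[-1]}+"
    return f"{edges[i]}-{edges[i + 1] - 1}"


def avg_helpful_votes(reviews, edges=EDGES) -> dict:
    """Mean helpful votes per length band; `reviews` yields (text, helpful_votes)."""
    votes = defaultdict(list)
    for text, helpful in reviews:
        votes[band_label(len(text), edges)].append(helpful)
    return {band: mean(v) for band, v in votes.items()}
```

For example, two short reviews with 2 and 3 votes and two 500+ character reviews with 6 and 4 votes average out to 2.5 and 5 respectively.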

While we don’t have the data to directly tie helpfulness to sales and conversions, it’s hardly a giant leap to assume that relationship exists. Why? As we mentioned, reviews exist to help consumers make purchase decisions. Period. The more helpful the review, the more likely it is to do that.

So how do you help your customers write longer reviews? How do you motivate customers writing positive reviews to say more? We know that negative reviewers already have more to say, so how can you encourage customers that are happy to elaborate?

How to get longer 5-star reviews

Structure Review Form With This Goal in Mind

This example shows the “write a review” form used by Room & Board (which happens to be a PowerReviews customer). As you can see, a customer goes through a series of prompts before being asked for their open-ended commentary.

These questions serve a few purposes:

- They help populate Pro/Con lists at the top of the review display that summarize information contained in the reviews.

- They gather information about the customer that might be helpful to readers, such as what type of home they live in. Shoppers can then filter to the reviews that are most relevant to them.

- They plant seeds for the verbatim review commentary, which comes later in the form. Let’s look at this in a little more detail…

I specifically love the best uses option here. One of the most helpful topics to address in commentary is how consumers are using the product. By prompting customers to think about that by answering the question up top, you are helping them remember to include it in their free-text submission below.

Essentially, what Room & Board is doing here is guiding customers to provide better-quality reviews. Reviewers are typically not copywriters or product experts, and they don’t necessarily know what information other customers want. However, they are typically passionate about the product and motivated to share their experience. So planting these ideas in their heads before they get to the comment box helps ensure they write reviews that actually help the shoppers reading them.

Review Meter

We’ve been talking about review length a lot lately with our customers. We and they know how important it is. So we asked ourselves: how can we help them generate the more detailed content that will drive more conversions?

In response, we developed our latest product innovation: the review meter.

As you can see in this interactive example, a little green bar grows as the customer types. Explainer text beneath gently reminds customers to keep writing until they reach the minimum number of characters (this length can be customized to your own specification). Nothing stops the customer from submitting a shorter review. But the meter provides some encouragement to add just a little more detail, particularly if that customer has just been primed by you to talk about best uses or where they are using a product and why.

Once the customer hits that minimum character count, the meter lights up excitedly. The text also converts over to a “keep it up” message, further reinforcing positive behavior.
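The meter’s behavior can be modeled as a small pure function. This Python sketch is a hypothetical model, not PowerReviews’ implementation: the `min_chars` default of 100 and the countdown wording are assumptions, while the switch to a “keep it up” message at the threshold and the fact that submission is never blocked follow the description above.

```python
from dataclasses import dataclass


@dataclass
class MeterState:
    progress: float  # 0.0 to 1.0: how full the green bar is
    reached: bool    # True once the minimum length is met
    message: str     # helper text shown beneath the text box


def review_meter(text: str, min_chars: int = 100) -> MeterState:
    """Hypothetical review-length meter: fills as the customer types and
    switches to an encouraging message once the minimum is reached."""
    if min_chars <= 0:
        raise ValueError("min_chars must be positive")
    n = len(text.strip())
    if n >= min_chars:
        return MeterState(progress=1.0, reached=True, message="Keep it up!")
    remaining = min_chars - n
    unit = "character" if remaining == 1 else "characters"
    return MeterState(progress=n / min_chars, reached=False,
                      message=f"{remaining} more {unit} to go")


def can_submit(text: str) -> bool:
    """The meter only encourages; any non-empty review can still be submitted."""
    return bool(text.strip())
```

With the default threshold, `review_meter("Love this jacket!")` reports 83 more characters to go, while `can_submit` still returns `True` for the same text.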

It’s very simple. But that small visual can go a long way in helping to encourage customers to talk just a little more about their experiences and/or why they like something.

Here’s what McKenna Rowe from DRINKS (featured in the image above) thinks.

"I love it! It’s elegant AND I don’t have to bug my engineers to enable it on my side! Since we implemented this, we’re already seeing much better quality reviews."

McKenna Rowe, Product Design Lead at DRINKS

Now, wine is HIGHLY subjective. So our sample reviewer (in the image) provides context: they usually like red blends, and they don’t usually like dry wine. Others reading the review then gain a sense of this reviewer’s palate. Really critical context.

If you’re a PowerReviews customer, this is super easy to enable. Your Customer Success Manager would be delighted to talk you through it so please reach out if you’re interested.

Your Questions Answered: Webinar Q&A

During the webinar, we were asked a handful of questions by our live audience. Thank you to everyone who asked one; there were some good ones. Here are my responses:

Is there data about where buyers are coming from (e.g. ads, Google searches, affiliate links)?

Great question. Unfortunately, we don’t have the data to show how a reviewer ends up on a product page when they visit it organically to leave a review. That said, reviews aren’t something we see our customers use digital advertising for. They focus all that spend on converting shoppers into buyers and driving product sales. Also, if you think about the dynamics of product reviews, a customer needs to buy an item in order to leave authentic and credible content. They can’t review a product without experiencing it.

For this reason, the most common method for soliciting product reviews is a request email following a purchase. Between April 3 and June 7, 2020, 60% of reviews across all our customers were generated this way (at some ecommerce businesses, this can be as high as 90%). By comparison, 39% came from customers navigating to the product page organically, and the remaining 1% came from SMS invitations.

Any idea why length is decreasing? Too much work to type?

Very difficult to say. If I had to venture a guess, you could perhaps put it down to the higher review submission levels. Perhaps individuals are providing multiple reviews as their ecommerce purchase volumes have risen. We also may be seeing more reviews coming from customers who aren’t used to buying online, and therefore aren’t used to sharing their experiences. But we don’t have the data to back that up, unfortunately.

As noted above, the change in real terms is not significant. We’re talking about a handful of words, so in that sense it’s not a big deal. But the reality is that even a handful of words can make a huge difference. With review volumes up, we think this is a great opportunity to encourage better-quality content, especially from shoppers who might be new to providing this kind of feedback.

What is the recommended minimum review length?

It’s not black and white, and again, it’s hard to say. It’s about finding the right length for your own business. Shoppers buying higher-consideration items are of course going to have more questions, so in these cases longer reviews will be of incrementally more value.

More generally, short reviews can still be valuable if they provide good information that converts browsers to buyers. It’s just that longer reviews are more likely to contain that kind of information, which makes them more likely to be valuable.

The data says 500+ character reviews are the most helpful. It’s not realistic for every review to be this detailed, but this should be the aspiration.

I think if you look at where we are now as an industry (average review lengths of 130-140 characters), an increase of roughly 60 characters, to around 200 characters overall, would make a big difference to conversion levels.

Is the % change a year-over-year (YoY) comparison?

As mentioned above, the data we present starts at the end of February. We wanted to see the impact of the pandemic on ecommerce sales and review trends, and since that is when the effects really started to kick in, it seemed a logical place to start.

With that said, you’re right that we haven’t accounted for seasonality in these numbers, so it may be a factor. But we try to call it out where we can, for example the increase in volumes around Memorial Day, which tends to be a highly promotional time for retail.

For your totals and results are you using gross number of reviews or a truer number of reviews that removes duplicate and non-product related reviews?

All the reviews we analyze are product reviews. This is where our expertise is. Our analysis is on ratings and reviews content across an extensive and highly representative volume of product pages (1.5m+ product pages across 1,200+ brands).

We do help companies share the same reviews across multiple sites (e.g. their own brand site, Amazon, Target etc.). In these instances, each review is only counted once in our analysis.

We support our customers with extensive human moderation resources, primarily to ensure authenticity. This is actually a key difference between us and our main competitors. We exist to drive fully informed consumer purchase decisions and greater transparency.

Our human moderators ensure no duplicate reviews are published as part of their checks.

Check out the recording of our webinar here or come back to PowerReviews.com/Insights next month for our July Market Trends Monthly Snapshot.